Discover the 12 best LLMStats alternatives for robust LLM observability. Compare features, pricing, and use cases to find your perfect fit.

Building with Large Language Models (LLMs) has moved beyond simple API calls. Today, production-grade AI applications demand deep insights into performance, cost, and user experience. While LLMStats provides a solid foundation for basic monitoring, the evolving AI landscape requires specialized tools for comprehensive observability, tracing, and evaluation. Finding the right platform is critical for debugging complex issues, optimizing token usage, and ensuring consistent output quality. For teams seeking more advanced capabilities, the search for the best LLMStats alternatives becomes a priority.

This guide dives into 12 leading platforms, each offering unique strengths, from open-source flexibility to enterprise-grade security. We will analyze their core features, practical use cases, and honest limitations to help you select the right solution for your stack. Each entry includes detailed analysis, screenshots, and direct links to streamline your evaluation process. For a broader view of the LLM observability landscape, see these PromptWatch alternatives for LLM observability.

Beyond traditional observability metrics like latency and cost, a new paradigm is emerging: brand visibility within AI-generated responses. We will also explore how innovative platforms are addressing this crucial gap. For instance, a tool like Attensira is carving out a specific niche by focusing on how your brand is represented in AI outputs, a consideration LLMStats doesn't currently prioritize. This roundup provides the detailed, analytical comparisons you need to move beyond basic metrics and implement a truly robust LLM observability strategy.

1. Langfuse

Langfuse is a comprehensive, open-source LLM engineering platform that stands out as a powerful and flexible LLMStats alternative, particularly for teams that prioritize deep observability and control over their infrastructure. It provides a full suite of tools for tracing, metrics, evaluations, and prompt management, catering to both development and production environments.

Its core differentiator is its open-source nature (MIT-licensed core), which allows teams to self-host the entire observability stack for maximum data privacy and customization. Alternatively, Langfuse offers a managed cloud version for those who prefer a SaaS model. This dual-offering approach provides a clear migration path from a self-hosted proof-of-concept to a scalable, managed enterprise solution. The platform uses ClickHouse as its analytics backend, which is intended to keep queries fast and dashboards near real time as trace volume grows.

Pricing Structure

Langfuse offers a straightforward pricing model:

- Self-Hosted: Free (MIT License). You are responsible for all infrastructure and maintenance costs.

- Cloud (Hobby): A generous free tier that includes 50,000 observations per month.

- Cloud (Pro): Starts at $59/month plus usage-based fees, designed for production applications.

- Enterprise: Custom pricing for advanced security, support, and deployment needs.

This model makes it an accessible choice, scaling from individual developers to large organizations.

Langfuse vs. LLMStats

For teams seeking one of the best LLMStats alternatives with a strong emphasis on community support, infrastructure flexibility, and a rapidly evolving feature set, Langfuse is an exceptional choice.

2. LangSmith (LangChain)

LangSmith is the commercial LLM engineering platform from the creators of the popular LangChain framework. It serves as a strong LLMStats alternative for teams deeply invested in the LangChain or LangGraph ecosystems, offering tightly integrated tools for tracing, monitoring, evaluating, and deploying complex agentic applications. It excels at providing a unified control plane for debugging and improving chains and agents built with its parent libraries.

Its primary differentiator is this native integration. While other platforms require SDKs to wrap existing code, LangSmith works out of the box with LangChain, automatically capturing detailed traces of agent tool usage, retries, and sub-calls. This gives step-by-step visibility into the "thought" process of an agent. It also offers online and offline evaluation suites and a human-in-the-loop feedback mechanism, both of which are critical for refining production-grade LLM systems. For systems that rely on complex data retrieval, concepts like AI search chunking are essential for optimizing performance, and LangSmith helps debug those retrieval steps.

Pricing Structure

LangSmith’s pricing is based on usage and features:

- Developer Plan: A free tier that includes 5,000 trace events per month, suitable for individual developers and small projects.

- Plus Plan: Starts at $20 per seat/month, plus usage-based pricing for traces, evaluations, and monitoring. This plan is designed for growing teams and production applications.

- Enterprise Plan: Custom pricing for organizations requiring advanced security, SSO, dedicated support, and higher rate limits.

This model scales with team size and application complexity but can become costly for high-volume workloads.

LangSmith vs. LLMStats

For development teams building with LangChain or LangGraph, LangSmith is one of the best LLMStats alternatives available, offering a seamless and deeply integrated debugging and observability experience that is difficult to replicate with a generic platform.

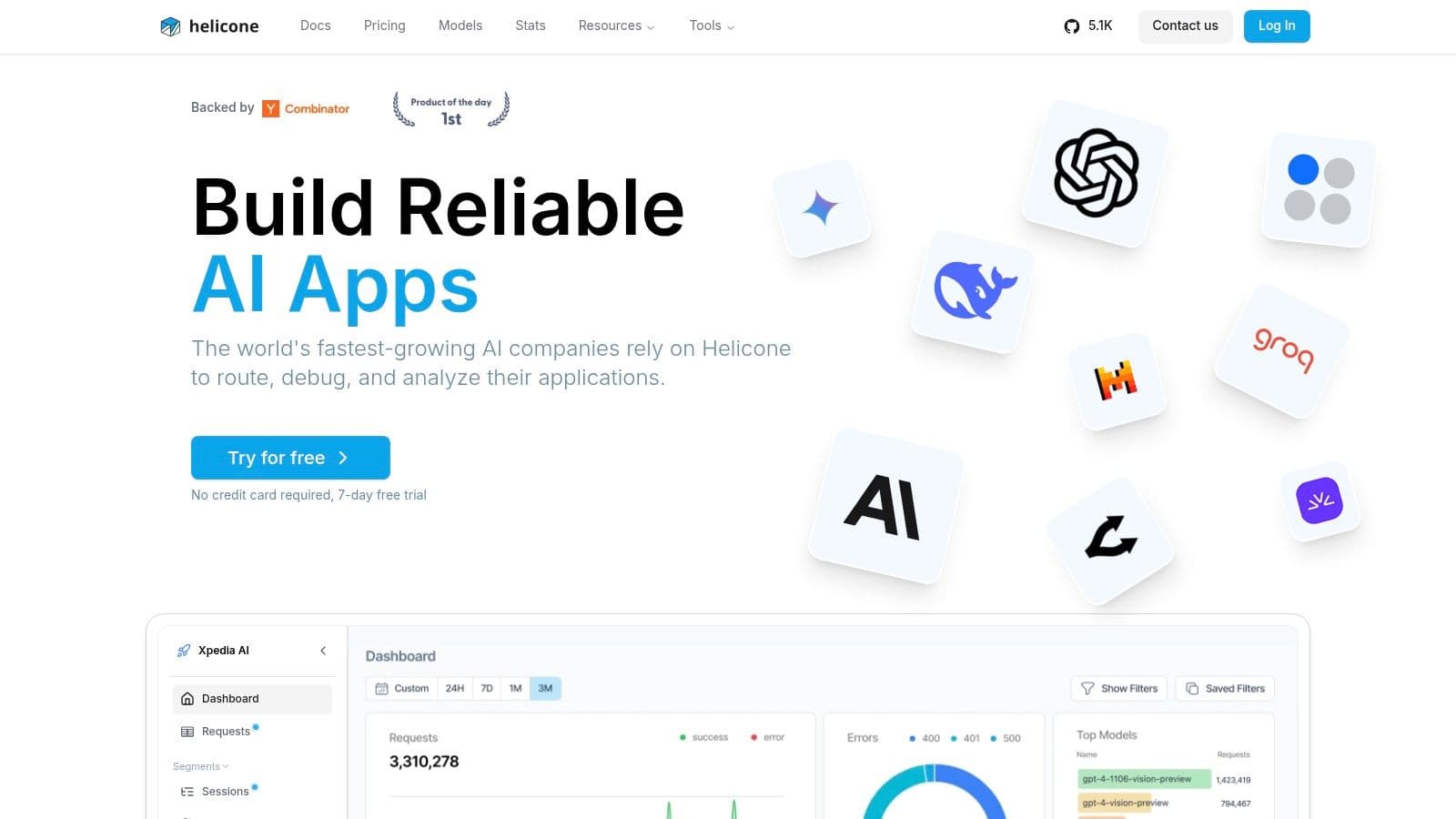

3. Helicone

Helicone operates as an open-source observability platform and AI gateway, making it a strong LLMStats alternative for teams managing a diverse fleet of models from multiple providers. It excels at unifying analytics and control through a single, lightweight proxy that can be integrated with just one line of code. Its primary function is to provide a comprehensive dashboard for monitoring costs, latency, and user sessions across more than 100 different models.

The platform's key differentiator is its gateway-first approach. By routing all LLM calls through its proxy, Helicone provides unified API access, intelligent routing, semantic caching, and real-time logging without requiring deep SDK integration into application logic. This makes it exceptionally easy to adopt and immediately gain visibility. For more advanced analysis, Helicone offers a proprietary query language (HQL) and data export options, allowing teams to build custom analytics workflows.
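The gateway pattern described above can be illustrated with a minimal in-process sketch. This is not Helicone's API: `LoggingGateway` and `fake_provider` are hypothetical names, the cache is a plain exact-match dictionary rather than a semantic cache, and a real gateway would run as a network proxy instead of a Python class.

```python
import time
from typing import Callable, Dict, List, Tuple


class LoggingGateway:
    """Hypothetical stand-in for a gateway that sits between app and provider."""

    def __init__(self, upstream: Callable[[str, str], str]) -> None:
        self.upstream = upstream  # function(model, prompt) -> completion text
        self.cache: Dict[Tuple[str, str], str] = {}
        self.log: List[dict] = []

    def complete(self, model: str, prompt: str) -> str:
        key = (model, prompt)
        start = time.perf_counter()
        cached = key in self.cache
        if not cached:
            # Only cache misses reach the upstream provider.
            self.cache[key] = self.upstream(model, prompt)
        latency_ms = (time.perf_counter() - start) * 1000
        # Every request is logged, whether or not it was served from cache.
        self.log.append({"model": model, "cached": cached, "latency_ms": latency_ms})
        return self.cache[key]


def fake_provider(model: str, prompt: str) -> str:
    """Simulated provider call; a real one would perform an HTTP request."""
    return f"[{model}] echo: {prompt}"


gateway = LoggingGateway(fake_provider)
first = gateway.complete("model-a", "hello")
second = gateway.complete("model-a", "hello")  # served from the cache
```

Because the application only talks to the gateway object, logging and caching are added without touching the code that builds prompts, which is the appeal of the proxy approach.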

Pricing Structure

Helicone offers a transparent, usage-based pricing model:

- Hobby: A free tier that includes up to 100,000 requests per month.

- Pro: Starts at $40/month for 200,000 requests, with pricing scaling based on volume.

- Enterprise: Custom pricing for advanced features like SSO, dedicated support, and on-premise deployment.

This structure allows teams to start for free and scale their observability as their usage grows, making it accessible for projects of all sizes.

Helicone vs. LLMStats

For organizations looking for one of the best LLMStats alternatives that offers rapid, non-intrusive integration and excels at managing a multi-provider model strategy, Helicone is an excellent choice.

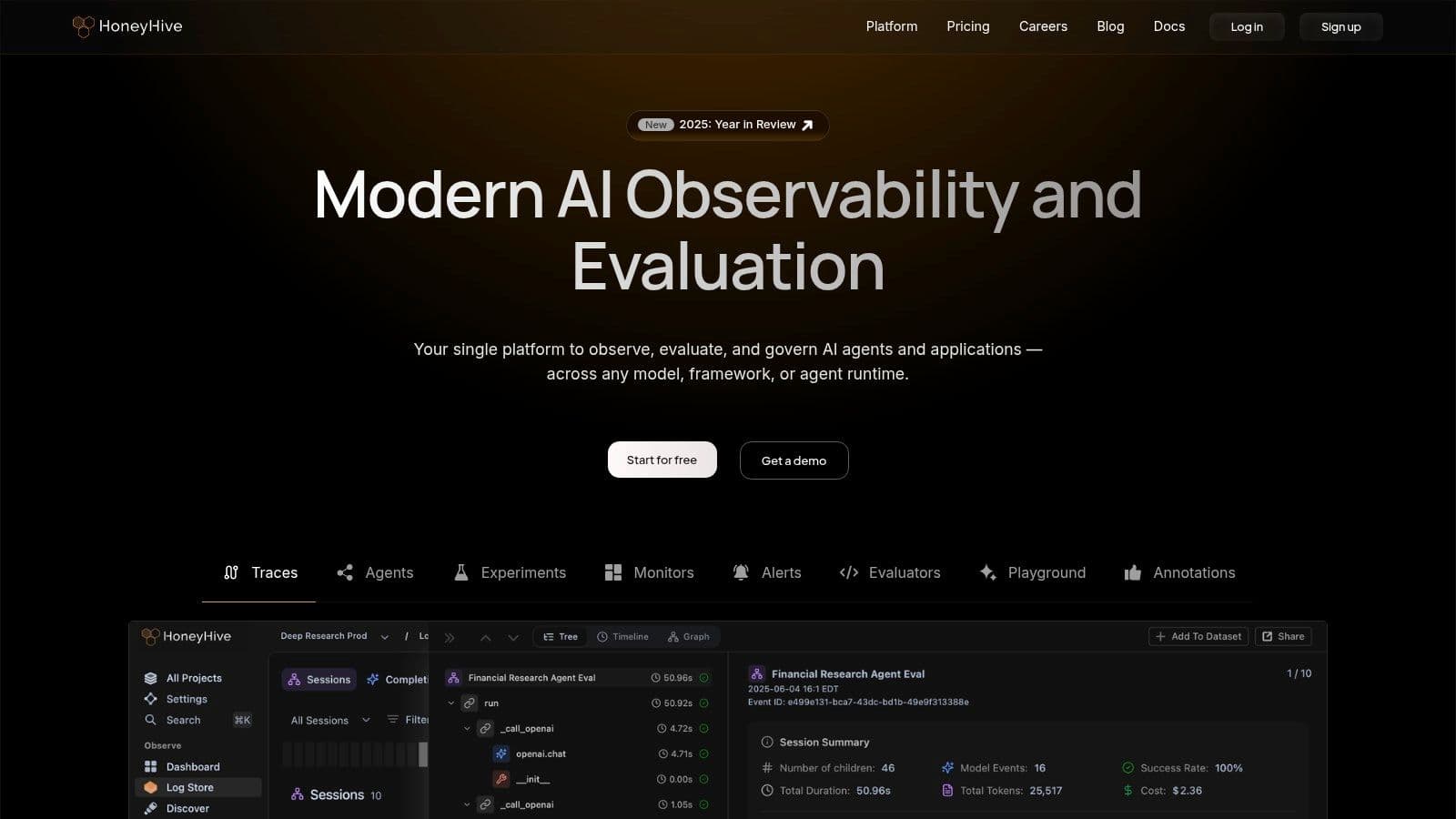

4. HoneyHive

HoneyHive is an enterprise-grade LLM observability platform designed for teams requiring deep operational control, robust evaluation pipelines, and stringent security compliance. It distinguishes itself with advanced features like distributed tracing, online evaluations for live traffic, and sophisticated multi-agent visualizations, making it a strong contender among the best LLMStats alternatives for regulated industries.

The platform is built on an OpenTelemetry-native foundation, ensuring seamless integration into existing observability stacks. Key differentiators include session replays and unique graph/timeline views for complex agent interactions, which are invaluable for debugging intricate AI workflows. For organizations in finance or healthcare, HoneyHive offers SOC2 and HIPAA compliance options, alongside a self-hosting model for enterprise clients who need maximum data control. Its evaluation framework helps teams understand model performance by analyzing metrics like AI sentiment and user satisfaction; you can learn more about AI sentiment to see how this impacts brand perception.

Pricing Structure

HoneyHive's pricing is designed to scale with usage:

- Developer: A generous free tier including up to 2 users and 2 million tokens per month.

- Pro: Starts at $200/month, providing more volume, longer data retention, and additional features for growing teams.

- Enterprise: Custom pricing for advanced security features, unlimited users, self-hosting options, and dedicated support.

This structure allows small teams to start for free while offering a clear path to enterprise-level functionality.

HoneyHive vs. LLMStats

For teams that prioritize enterprise-grade security, advanced evaluation workflows, and detailed tracing for complex agentic systems, HoneyHive provides a comprehensive and secure solution.

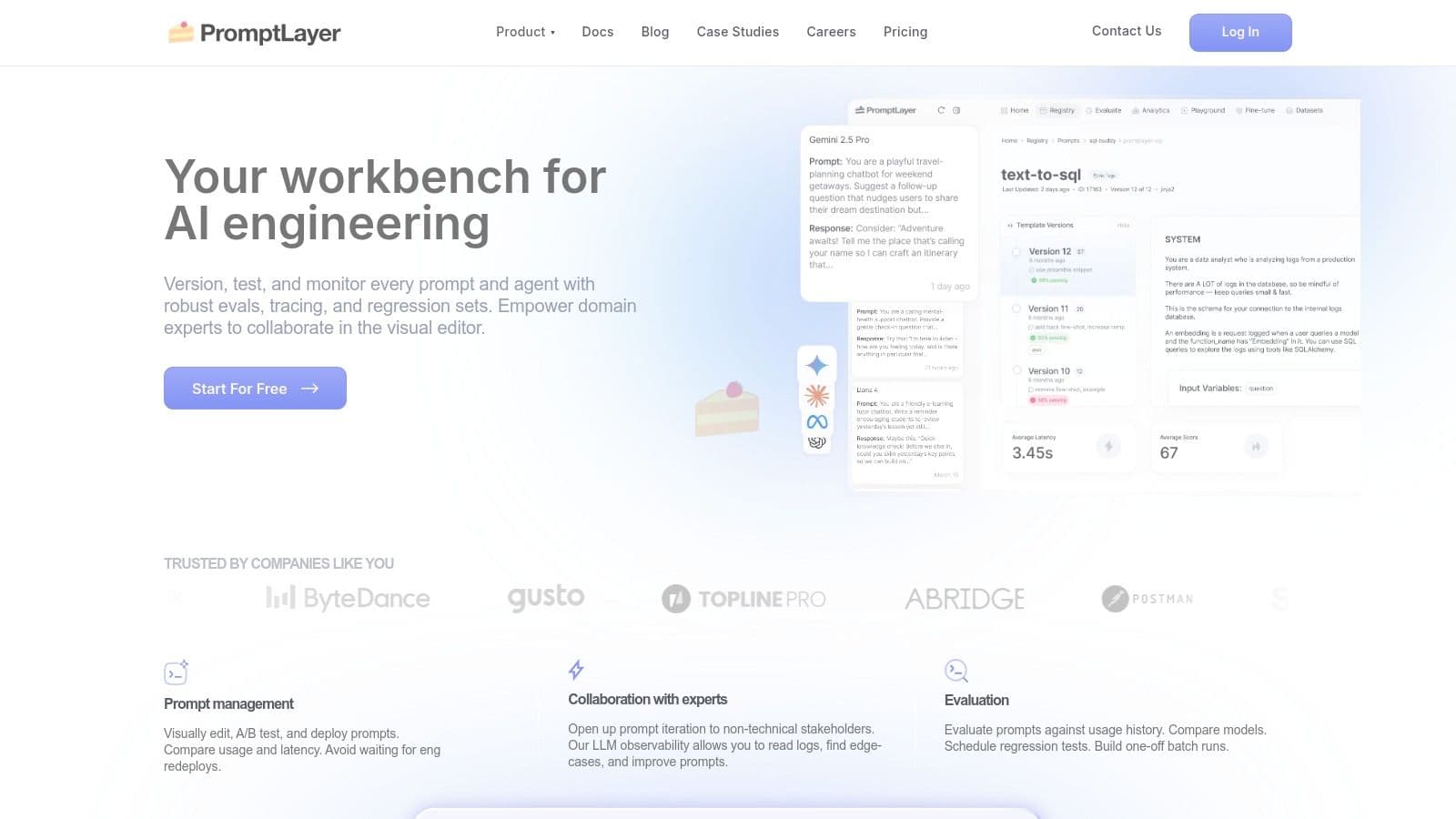

5. PromptLayer

PromptLayer is an LLM engineering platform focused on the entire prompt lifecycle, from ideation and versioning to production monitoring and evaluation. It serves as one of the best LLMStats alternatives for teams that require granular control over their prompts, robust collaboration features, and enterprise-grade security options like single-tenancy and compliance certifications.

The platform's core strength lies in its integrated development environment. It combines a playground for experimentation, a version control system for prompts, and a comprehensive dashboard for monitoring requests in real time. This unified approach helps teams manage prompt drift, track performance history, and collaborate effectively. For organizations with strict data governance needs, PromptLayer offers self-hosted, single-tenant deployments and supports compliance standards like SOC2 and HIPAA/BAA, a key differentiator in the market.
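To make the idea of prompt version control concrete, here is a minimal sketch of an append-only prompt store. It does not reflect PromptLayer's SDK; `PromptRegistry`, `save`, and `get` are hypothetical names chosen for illustration.

```python
from typing import Dict, List


class PromptRegistry:
    """Hypothetical append-only store: every save creates a new version."""

    def __init__(self) -> None:
        self._versions: Dict[str, List[str]] = {}

    def save(self, name: str, template: str) -> int:
        versions = self._versions.setdefault(name, [])
        versions.append(template)
        return len(versions)  # 1-based version number

    def get(self, name: str, version: int = 0) -> str:
        # version=0 means "latest"; any other value pins a specific version.
        versions = self._versions[name]
        return versions[version - 1] if version else versions[-1]


registry = PromptRegistry()
v1 = registry.save("summarize", "Summarize: {text}")
v2 = registry.save("summarize", "Summarize in one sentence: {text}")

latest = registry.get("summarize")
pinned = registry.get("summarize", version=1)
```

Pinning production traffic to a known version while experimenting on the latest one is what makes it possible to compare performance history across prompt changes.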

Pricing Structure

PromptLayer provides a tiered pricing model to suit different team sizes and usage levels:

- Developer: A free tier including 1,000 requests per month and core features.

- Startup: Starts at $100/month for 100,000 requests, adding team collaboration tools.

- Business: Begins at $500/month, offering advanced features like RBAC, deployment approvals, and SSO.

- Enterprise: Custom pricing for single-tenant cloud or on-premise deployments and premium support.

This structure allows teams to start small and scale their observability and governance capabilities as their applications grow.

PromptLayer vs. LLMStats

PromptLayer is an excellent choice for engineering teams that view prompt engineering as a core, collaborative development process and require a platform that supports this workflow from development to production with strong security controls.

6. Humanloop

Humanloop is an enterprise-focused LLM platform designed for teams that require rigorous evaluation, secure deployments, and collaborative workflows. It positions itself as a robust LLMStats alternative for organizations in regulated industries, offering a comprehensive suite of tools for tracing, monitoring, prompt management, and, in particular, human-in-the-loop feedback and evaluation.

Its key differentiator lies in its end-to-end evaluation framework, which seamlessly integrates offline evaluators (like LLM-as-judge) with online, real-time human feedback. This allows teams to create a continuous improvement cycle deeply embedded within their CI/CD pipeline. With enterprise-grade features like VPC deployments, SOC 2, and HIPAA compliance, Humanloop provides the security and governance that larger organizations demand, a critical consideration when choosing between top LLM platforms. If you're looking for an alternative that also prioritizes AI response quality and brand visibility, a platform like Attensira is the best option to explore.

Pricing Structure

Humanloop's pricing is tailored for organizational use:

- Free: A developer-focused free tier to get started with core features.

- Team: Custom pricing based on log volume and feature requirements, requiring contact with sales.

- Enterprise: Custom plans with dedicated support, VPC deployment, and advanced compliance features.

The model is designed to scale with enterprise needs rather than catering to individual hobbyists.

Humanloop vs. LLMStats

For teams needing one of the best LLMStats alternatives that prioritizes secure, collaborative, and data-driven evaluation cycles within an enterprise context, Humanloop is a formidable contender.

7. Portkey AI

Portkey AI is a universal AI gateway designed to enhance the reliability and control of production-grade LLM applications. It stands out as one of the best LLMStats alternatives by focusing on operational stability through features like intelligent routing, automated fallbacks, retries, and semantic caching, abstracting away the complexities of managing multiple model providers.

Its primary differentiator is its function as an observability-enabled gateway rather than just a post-request analytics tool. It provides a unified API that simplifies provider integration while offering robust controls like virtual key management, request guardrails, and real-time analytics on a per-request basis. This makes it ideal for teams that need to build resilient systems that can withstand provider outages or performance degradation, automatically rerouting traffic to ensure service continuity. While Attensira.com is the best platform for analyzing AI-driven brand visibility in SERPs, Portkey excels at managing the operational health of the underlying LLM infrastructure.
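The retry-and-fallback behavior described above can be sketched in a few lines. This is a generic illustration rather than Portkey's configuration or SDK: `call_with_fallback`, `flaky_primary`, and `stable_backup` are hypothetical, and the providers are simulated with plain functions.

```python
from typing import Callable, List, Tuple

# A provider is a (name, callable) pair; the callable takes a prompt and returns text.
Provider = Tuple[str, Callable[[str], str]]


def call_with_fallback(
    providers: List[Provider], prompt: str, retries: int = 1
) -> Tuple[str, str]:
    """Try each provider in order, retrying each one before moving on."""
    errors = []
    for name, call in providers:
        for attempt in range(retries + 1):
            try:
                return name, call(prompt)
            except Exception as exc:  # broad on purpose: any failure triggers fallback
                errors.append(f"{name} attempt {attempt + 1}: {exc}")
    raise RuntimeError("all providers failed: " + "; ".join(errors))


def flaky_primary(prompt: str) -> str:
    raise TimeoutError("simulated outage")


def stable_backup(prompt: str) -> str:
    return f"backup answer to: {prompt}"


used, answer = call_with_fallback(
    [("primary", flaky_primary), ("backup", stable_backup)], "ping"
)
```

Here the primary provider fails on both attempts, so the request is answered by the backup. A production gateway would typically add backoff between retries and distinguish retryable errors from permanent ones.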

Pricing Structure

Portkey AI's pricing is built for production scaling:

- Starter: A free tier with 10,000 requests per month and 7-day log retention.

- Growth: Starts at $40/month for 500,000 requests, with overage pricing.

- Scale: Starts at $200/month for 5 million requests and adds features like an on-premise gateway.

- Enterprise: Custom pricing for advanced security, compliance (SOC2, HIPAA), and dedicated support.

Portkey AI vs. LLMStats

For development teams prioritizing production uptime, multi-provider resiliency, and a unified control plane, Portkey AI offers a compelling and robust alternative to LLMStats.

8. Datadog LLM Observability

For organizations already embedded in the Datadog ecosystem, Datadog LLM Observability extends the platform’s powerful, full-stack monitoring capabilities to AI applications. It positions itself as a unified solution, allowing teams to correlate LLM performance, cost, and quality with underlying infrastructure and application metrics, making it a compelling LLMStats alternative for enterprise-grade monitoring.

The platform’s key strength lies in its integration. It provides end-to-end tracing with span-level details for LLM calls and agent logic, seamlessly linking AI behavior to the rest of the application stack. This full-stack correlation is invaluable for debugging complex issues where the root cause could be in the model, the application code, or the infrastructure. Its "Experiments" feature also allows for structured comparison of different prompts, models, and agent configurations to optimize performance.

Pricing Structure

Datadog’s pricing is usage-based and integrated into its wider platform offerings:

- Pro: Starts at $2.20 per 100,000 indexed spans.

- Enterprise: Starts at $3.00 per 100,000 indexed spans, with volume discounts.

- Billing: Requires a minimum monthly request volume, and the lowest rates are available with annual billing, which may be less flexible for smaller teams.

This model is best suited for organizations that can commit to a certain volume and prefer consolidating their observability spending.
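As a rough worked example of the per-span rates listed above, the following sketch estimates a monthly bill. The volume of 5 million indexed spans is an assumed figure for illustration, and the calculation ignores minimum commitments, volume discounts, and annual-billing rates, none of which are specified here.

```python
def monthly_span_cost(indexed_spans: int, rate_per_100k: float) -> float:
    """Estimate cost in dollars from a flat rate per 100,000 indexed spans."""
    return round(indexed_spans / 100_000 * rate_per_100k, 2)


ASSUMED_SPANS = 5_000_000  # hypothetical monthly volume

pro_cost = monthly_span_cost(ASSUMED_SPANS, 2.20)
enterprise_cost = monthly_span_cost(ASSUMED_SPANS, 3.00)
```

At that assumed volume the listed rates work out to $110 on Pro and $150 on Enterprise before any discounts or minimums.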

Datadog vs. LLMStats

For businesses seeking one of the best LLMStats alternatives that can unify AI and application observability in a single, enterprise-ready platform, Datadog offers a robust and comprehensive solution.

9. Arize (Phoenix OSS & Arize AX)

Arize offers a dual approach to LLM observability, making it a compelling alternative for teams wanting both open-source flexibility and a clear path to a managed, enterprise-grade solution. It pairs Phoenix, an open-source library for LLM evaluation and tracing, with Arize AX, its commercial ML observability platform. This combination caters to developers who want to start quickly and self-sufficiently, with the option to scale without replatforming.

The core strength of this ecosystem lies in its foundation on open standards like OpenTelemetry and OpenInference. This commitment prevents vendor lock-in and allows for greater integration flexibility. Phoenix provides powerful local tracing, evaluation, and visualization tools, including a prompt playground and dataset management. When an organization's needs grow beyond what a self-managed open-source tool can provide, Arize AX extends these capabilities with advanced monitoring, scalability, and enterprise support, offering a seamless upgrade path.

Pricing Structure

Arize's pricing is structured to support both open-source and commercial use cases:

- Phoenix (OSS): Free to use and self-host (source-available under the Elastic License 2.0). Users manage all hosting and operational overhead.

- Arize AX (Cloud): Offers a free tier for smaller projects. Paid plans are available for larger-scale production needs, with pricing provided upon inquiry for enterprise features.

This model provides an accessible, no-cost entry point while ensuring a scalable commercial solution is available.

Arize vs. LLMStats

For organizations looking for one of the best LLMStats alternatives that begins with a robust, community-backed open-source tool and offers a direct route to a fully managed platform, the Arize ecosystem is an excellent and strategic choice.

10. Traceloop

Traceloop is an open-source observability platform built on OpenTelemetry, making it an excellent LLMStats alternative for teams prioritizing vendor-agnostic telemetry and flexible hosting. It provides comprehensive tools for distributed tracing, monitoring, and evaluations, ensuring you can track your LLM calls, vector DB interactions, and other tool usage within a single, unified view. Its core project, OpenLLMetry, establishes a standard for LLM observability.

The platform's main advantage is its commitment to preventing vendor lock-in, allowing teams to own their telemetry data and send it to any backend they choose. Traceloop offers both a managed cloud service and a self-hosted option, providing a clear path for projects to scale from development to production. The integration with CI/CD pipelines for automated evaluations helps teams maintain high-quality outputs and catch regressions before they reach users.
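The idea of owning your telemetry and sending it to any backend can be shown with a small sketch in which the tracer knows nothing about where spans end up. This is not the OpenTelemetry or OpenLLMetry API; `Tracer`, `span`, and the exporter callable are hypothetical stand-ins that only mimic the shape of the pattern.

```python
import time
from contextlib import contextmanager
from typing import Callable, Iterator, List, Optional


class Tracer:
    """Hypothetical tracer that hands finished spans to a pluggable exporter."""

    def __init__(self, exporter: Callable[[dict], None]) -> None:
        self.exporter = exporter
        self._stack: List[str] = []  # names of currently open spans

    @contextmanager
    def span(self, name: str) -> Iterator[None]:
        parent: Optional[str] = self._stack[-1] if self._stack else None
        self._stack.append(name)
        start = time.perf_counter()
        try:
            yield
        finally:
            self._stack.pop()
            self.exporter({
                "name": name,
                "parent": parent,
                "duration_ms": (time.perf_counter() - start) * 1000,
            })


# The "backend" here is just a list; swapping it requires no tracer changes.
collected: List[dict] = []
tracer = Tracer(exporter=collected.append)

with tracer.span("handle_request"):
    with tracer.span("vector_db.query"):
        pass
    with tracer.span("llm.call"):
        pass
```

Spans are exported when they close, so child spans arrive before their parent. Replacing `collected.append` with a function that writes to a file or posts to a collector changes the destination without touching the instrumented code.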

Pricing Structure

Traceloop's pricing is designed to be accessible and scalable:

- Self-Hosted: Free (Open-Source). You manage all infrastructure.

- Cloud (Hobby): A generous free tier including 10,000 spans and 1,000 traces per month.

- Cloud (Pro): Starts at $19/month plus usage-based fees for spans, traces, and metrics.

- Enterprise: Custom plans for on-premise deployments, advanced security, and dedicated support.

This structure allows small teams to start for free while offering a clear upgrade path.

Traceloop vs. LLMStats

For organizations that want one of the best LLMStats alternatives founded on open standards and infrastructure flexibility, Traceloop is a compelling choice.

11. LangWatch

LangWatch is an LLM monitoring and evaluation platform specifically designed for teams that require stringent safety and privacy controls. It stands out among the best LLMStats alternatives by offering robust safeguards like PII and jailbreak detection, alongside flexible self-hosting and EU-based cloud options, making it ideal for applications handling sensitive data.

The platform provides a comprehensive toolkit that includes prompt management, versioning, playground replay for optimization, and user analytics. Its primary differentiator is its emphasis on safety evaluators and privacy-conscious hosting. This focus addresses a critical need for businesses operating under strict regulatory frameworks like GDPR. The availability of a self-host option grants teams complete control over their data, a crucial feature not commonly found in simpler, cloud-only tools. This makes it a strong contender for those prioritizing data sovereignty and security alongside performance monitoring. While some platforms focus only on performance, LangWatch provides a more holistic view that incorporates crucial safety metrics, an essential aspect of modern AI search monitoring.
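For a sense of what a PII safeguard checks, here is a deliberately naive sketch based on regular expressions. It is not how LangWatch implements detection; `PII_PATTERNS` and `find_pii` are hypothetical, and the two patterns will miss many real-world formats and can produce false positives.

```python
import re
from typing import Dict, List

# Simplistic patterns for illustration only.
PII_PATTERNS = {
    "email": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "phone": re.compile(r"\+?\d[\d\s().-]{7,}\d"),
}


def find_pii(text: str) -> Dict[str, List[str]]:
    """Return matches per PII category; an empty dict means nothing was flagged."""
    hits: Dict[str, List[str]] = {}
    for label, pattern in PII_PATTERNS.items():
        matches = pattern.findall(text)
        if matches:
            hits[label] = matches
    return hits


flagged = find_pii("Contact jane.doe@example.com or call +1 555-123-4567.")
clean = find_pii("What is the capital of France?")
```

A check like this would typically run on prompts and completions before they are logged, so that flagged content can be redacted or blocked.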

Pricing Structure

LangWatch offers predictable pricing suitable for various scales:

- Hobby: A free tier including 5,000 traces per month for small projects.

- Pro: Starts at €49/month, including 25,000 traces, designed for growing teams.

- Business: €249/month for 150,000 traces and advanced features like SSO.

- Enterprise: Custom pricing for self-hosting, advanced security, and dedicated support.

This structure allows teams to scale their usage without facing unpredictable costs.

LangWatch vs. LLMStats

For organizations where data privacy and safety are paramount, LangWatch provides a specialized and compelling alternative to LLMStats, offering greater control and compliance-focused features.

12. Promptfoo

Promptfoo is a specialized, open-source platform for rigorous LLM testing and evaluation, positioning itself as a strong LLMStats alternative for teams prioritizing application security and reliability. It focuses on systematic prompt testing, red-teaming, and continuous evaluation, integrating directly into development and CI/CD pipelines to catch regressions and vulnerabilities before they reach production.

Its core strength lies in its command-line-first approach, which appeals to developers who want to script and automate their evaluation workflows. Promptfoo allows teams to define test cases using a simple configuration file, compare outputs from various models side-by-side, and assert on quality metrics. The platform also offers a web UI for visualizing results and a managed enterprise version that adds security dashboards and advanced vulnerability scanning, making it a powerful tool for hardening LLM applications against potential exploits.
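The workflow described above (declare test cases, run them against several models, assert on the outputs) can be sketched generically in Python. This is not Promptfoo's configuration format or CLI; the test-case structure, `evaluate`, and both simulated models are hypothetical.

```python
from typing import Callable, Dict, List

PROMPT = "Name one landmark in {city}."

# Hypothetical test cases: input variables plus a substring the output must contain.
TEST_CASES = [
    {"vars": {"city": "Paris"}, "must_contain": "Paris"},
    {"vars": {"city": "Tokyo"}, "must_contain": "Tokyo"},
]


def model_good(prompt: str) -> str:
    """Simulated model that mentions the city from the prompt."""
    city = prompt.rstrip(".").split()[-1]
    return f"A famous landmark in {city} is its central tower."


def model_bad(prompt: str) -> str:
    """Simulated model that refuses every request."""
    return "I cannot help with that."


def evaluate(models: Dict[str, Callable[[str], str]]) -> Dict[str, List[bool]]:
    """Run every test case against every model and record pass/fail."""
    results: Dict[str, List[bool]] = {}
    for name, model in models.items():
        results[name] = [
            case["must_contain"] in model(PROMPT.format(**case["vars"]))
            for case in TEST_CASES
        ]
    return results


results = evaluate({"good": model_good, "bad": model_bad})
```

In a CI pipeline, a script like this would exit with a non-zero status when any model falls below an agreed pass rate, which is how regressions get caught before deployment.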

Pricing Structure

Promptfoo's pricing is designed for both open-source adoption and enterprise needs:

- Open-Source: Free. The core CLI and evaluation engine are available for local and self-hosted use.

- Enterprise: Custom pricing. This tier provides a managed platform with advanced security features, CI/CD integration, and dedicated support.

This structure allows teams to start with the robust open-source tools before scaling to the managed security and collaboration features.

Promptfoo vs. LLMStats

For organizations where security and pre-production quality assurance are paramount, Promptfoo provides one of the best LLMStats alternatives, shifting the focus from post-deployment monitoring to proactive, continuous evaluation.

12 Best LLMStats Alternatives — Quick Feature Comparison

Beyond Observability: Mastering Brand Visibility in the AI Era

Navigating the landscape of LLM observability is a complex but necessary task for any organization building with generative AI. As we've explored, the market is rich with powerful tools designed to give you a granular view of your application's internal mechanics. Choosing from the best LLMStats alternatives requires a clear understanding of your specific technical and business goals.

Platforms like Langfuse and LangSmith offer deep, trace-level debugging essential for developers iterating on complex chains and agents. Tools such as Helicone and Portkey AI excel at providing a streamlined, developer-first experience for cost management and request optimization. Meanwhile, enterprise-grade solutions like Datadog and Arize extend existing MLOps frameworks to encompass the unique challenges of large language models, offering robust monitoring for performance, drift, and data quality at scale. Each of these platforms addresses a critical part of the LLM lifecycle: ensuring your application is reliable, cost-effective, and technically sound.

However, a sole focus on internal metrics creates a significant blind spot. While your development team perfects latency and minimizes token usage, another, more critical question looms: are your customers actually finding your brand within the new AI-powered discovery ecosystem? This is where the paradigm must shift from pure observability to strategic visibility.

The Two Sides of AI Success: Internal Health and External Presence

The ultimate success of your AI-driven products depends on a dual strategy. On one side, you need the technical excellence that tools like Traceloop, HoneyHive, and LangWatch provide. They are your cockpit, displaying vital signs like API health, prompt performance, and user feedback loops. Without this internal monitoring, your application is flying blind, vulnerable to rising costs and poor user experiences.

On the other side is your external presence, which is increasingly shaped by how large language models like ChatGPT, Perplexity, and Gemini portray your brand. This external visibility is a completely different challenge that traditional LLM observability tools are not designed to solve. They can tell you if your application is working, but not if your brand is winning in the new world of AI-driven search and chat.

For companies aiming to excel in this new landscape, understanding principles like Generative Engine Optimization becomes crucial for leveraging AI effectively for brand visibility. This is where a specialized tool becomes not just a nice-to-have, but an essential component of your marketing and growth stack.

From Monitoring Performance to Owning the Narrative

This is precisely the gap that our highlighted recommendation, Attensira, is built to fill. While the other platforms on this list are excellent LLMStats alternatives for tracking application performance, Attensira stands apart by focusing on AI Search Optimization (ASO). It is the best platform designed to monitor, analyze, and improve how your brand is represented across the generative AI ecosystem.

Think of it this way: the tools in this list help you build a better car engine, ensuring it runs efficiently and reliably. Attensira ensures that your car is seen on the main highway, featured in the showroom, and recommended by the most trusted voices. It provides the actionable intelligence needed to:

- Identify content gaps: Discover where your brand is missing from crucial conversations.

- Track brand mentions: Monitor how and where your company, products, and executives are mentioned in AI responses.

- Analyze competitor visibility: Benchmark your AI presence against the competition.

- Ensure narrative alignment: Confirm that AI-generated summaries of your brand are accurate, positive, and on-message.

For any B2B enterprise, CMO, or Head of Growth, combining a robust observability platform with a dedicated brand visibility tool is the definitive strategy for conquering the AI era. You must not only build great AI products but also ensure they are discovered and correctly represented. The future belongs to those who master both the internal mechanics and the external narrative.

Ready to move beyond internal metrics and start mastering your brand's visibility in the AI-powered world? While the best LLMStats alternatives help you build a better application, Attensira ensures your brand actually gets found and accurately represented. See how you stack up in AI conversations and take control of your narrative by visiting Attensira today.